Back in November, Morgan McGuire et al. published Weighted Blended Order-Independent Transparency in the Journal of Computer Graphics Techniques. The paper provides a great overview of Order Independent Transparency (OIT), a thorough survey of various weighting techniques, and contributes a visually plausible weighting scheme based on depth. If you haven’t read it yet, go check it out.

We thought this was terrific and wanted to bring it into our WebGL render pipeline using one render target.

Dan Bagnell over at the Cesium project quickly put up a blog post on their WebGL implementation of OIT. It sports a nice overview and an interactive demo. Go check it out.

Cesium’s implementation simulates multiple render targets by rasterizing transparent geometry twice, once into each render target, each time with a different shader. This works and, for simple geometry, seems justifiable. For our use case, where we expect geometrically complex transparent objects with plenty of material variation, doubling the state changes and draw calls seemed prohibitively expensive.

The method we ended up with is useful on systems that support multiple render targets as well. By getting rid of the second target and packing everything into a single floating point RGBA texture, we save bandwidth and memory at the expense of some ALU work and 1 or 4 extra neighbor texture samples in the compositing pass, which should hit the texture cache. Dropping the second (RGB) target saves 48 bits per pixel (bpp) on systems that support the gl.HALF_FLOAT data type, and 96bpp on systems that only support the full gl.FLOAT data type. Not too shabby.
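The arithmetic behind those numbers is simple: the dropped target has three channels, at 16 bits each for half floats or 32 bits each for full floats. The Python sketch below (a CPU-side illustration, since the shaders themselves are GLSL) spells it out. The helper names and the 1080p resolution in the usage are illustrative assumptions, and the sketch counts storage only, not the per-fragment blend traffic that also goes away.

```python
# Storage saved by dropping the second (RGB) render target.
# Bits per channel: HALF_FLOAT stores 16-bit floats, FLOAT stores 32-bit floats.
BITS_PER_CHANNEL = {"HALF_FLOAT": 16, "FLOAT": 32}


def target_bits_per_pixel(channels: int, channel_type: str) -> int:
    """Bits per pixel of a render target with `channels` channels of `channel_type`."""
    return channels * BITS_PER_CHANNEL[channel_type]


def target_size_mib(width: int, height: int, bits_per_pixel: int) -> float:
    """Size in MiB of one width x height target at the given bits per pixel."""
    return width * height * bits_per_pixel / 8 / (1024 * 1024)


if __name__ == "__main__":
    width, height = 1920, 1080  # illustrative resolution, not from the post
    for channel_type in ("HALF_FLOAT", "FLOAT"):
        bpp = target_bits_per_pixel(3, channel_type)
        print(f"{channel_type}: {bpp} bpp saved, "
              f"{target_size_mib(width, height, bpp):.2f} MiB of target memory at {width}x{height}")
```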

Demo

WebGL OIT with one render target

Bringing OIT to the web is an interesting challenge. The core implementation illustrated in McGuire et al.’s paper uses two render targets:

- One RGBA floating point target stores the sum of the weighted color of all overlapping fragments, as well as the sum of their weighted alpha values, used for normalization in the compositing stage.
- A second, RGB floating point target stores the raw, unweighted “revealage” factor (the product of one minus each fragment’s alpha), which is a scalar. Only the red channel is used.

Multiple render target support can be enabled for WebGL via the WEBGL_draw_buffers [http://www.khronos.org/registry/webgl/extensions/WEBGL_draw_buffers/] extension; however, as polled by webGLStats.com, it is not widely supported.

McGuire et al. additionally outline a single-target implementation that supports monochrome-only transparency:

gl_FragData[0] = vec4(vec2(Ci.r, ai) * w(zi, ai), 0, ai);

This monochrome implementation is the basis for my contribution. What stood out to me was the wasted blue channel. An RGBA target is required in order to assign one blend function to the weighted monochrome accumulation, and a different blend function to the “revealage” in the alpha channel like so:

gl.blendFuncSeparate(gl.ONE, gl.ONE, gl.ZERO, gl.ONE_MINUS_SRC_ALPHA);
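To see why this single blend state is order independent, here is a small Python sketch that emulates it on one pixel, assuming the default gl.FUNC_ADD blend equation and a target cleared to (0, 0, 0, 1). The color channels simply add, and the alpha channel multiplies in (1 - alpha) for each fragment, so every draw order produces the same pixel. The fragment values and helper names are hypothetical.

```python
import math
from functools import reduce
from itertools import permutations


def blend_separate(dst, src):
    """One blend step of gl.blendFuncSeparate(ONE, ONE, ZERO, ONE_MINUS_SRC_ALPHA)
    with the default FUNC_ADD equation, on a single RGBA pixel."""
    rgb = tuple(s * 1.0 + d * 1.0 for s, d in zip(src[:3], dst[:3]))  # additive
    alpha = src[3] * 0.0 + dst[3] * (1.0 - src[3])                      # multiplicative
    return rgb + (alpha,)


def monochrome_fragment(premultiplied_red, alpha, weight):
    """Output of vec4(vec2(Ci.r, ai) * w(zi, ai), 0, ai) for one fragment."""
    return (premultiplied_red * weight, alpha * weight, 0.0, alpha)


def accumulate(fragments):
    """Blend fragments, in the given order, into a target cleared to (0, 0, 0, 1)."""
    return reduce(blend_separate, fragments, (0.0, 0.0, 0.0, 1.0))


if __name__ == "__main__":
    # Hypothetical fragments: (premultiplied red, alpha, weight).
    frags = [monochrome_fragment(0.8 * 0.5, 0.5, 2.0),
             monochrome_fragment(0.1 * 0.3, 0.3, 1.0),
             monochrome_fragment(0.6 * 0.9, 0.9, 0.5)]
    results = [accumulate(order) for order in permutations(frags)]
    # Every draw order yields the same pixel, up to floating point rounding.
    for result in results:
        assert all(math.isclose(a, b) for a, b in zip(result, results[0]))
    print("accumulated:", results[0])
```

The alpha channel ends up holding the revealage, here (1 - 0.5)(1 - 0.3)(1 - 0.9), regardless of the order in which fragments arrive.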

If we could store the weighted color accumulation in only 2 channels, we could get everything needed into a single RGBA float target. We can, and luckily, I happened to already be using this technique in our g-buffer encoding, so I was familiar with the code.

We can achieve this through interlaced chroma subsampling, as described in The Compact YCoCg Frame Buffer (Mavridis and Papaioannou, Journal of Computer Graphics Techniques, 2012). The idea is to convert into a color space that separates luminance and chroma. Luminance is stored at every pixel, but only one of the two chroma components is stored at each pixel, alternating in a checkerboard pattern (interlaced). In the compositing stage, we reconstruct an approximation of the full three-channel signal by sampling neighboring pixels to retrieve the other chroma component. Subsampling chroma is a good approximation because it is perceptually motivated: we have a much harder time seeing changes in chroma than changes in luminance. Furthermore, chroma tends to change at a lower frequency than luminance in typical scenes.
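As a rough CPU-side illustration of the interlacing, the sketch below packs an RGB image into two channels per pixel using the same YCoCg coefficients as the rgbToYcocg and ycocgToRgb functions listed further down, and rebuilds each pixel from a single horizontal neighbor. On a checkerboard, every one of a pixel's four edge neighbors stores the other chroma component, which is what makes the neighbor lookup work. The function names and the red/green test image are assumptions for illustration.

```python
def rgb_to_ycocg(r, g, b):
    """Same coefficients as rgbToYcocg in the supporting functions."""
    return (0.25 * r + 0.5 * g + 0.25 * b,
            0.5 * r - 0.5 * b,
            -0.25 * r + 0.5 * g - 0.25 * b)


def ycocg_to_rgb(y, co, cg):
    """Inverse transform, as in ycocgToRgb."""
    return (y + co - cg, y + cg, y - co - cg)


def stores_co(x, y):
    """Checkerboard on integer pixel coordinates: True where a pixel keeps Co,
    False where it keeps Cg (matches checkerboard() > 0.0 at pixel centers)."""
    return (x % 2) != (y % 2)


def encode(image):
    """image[y][x] = (r, g, b) -> two channels per pixel: (Y, Co or Cg)."""
    encoded = []
    for y, row in enumerate(image):
        out_row = []
        for x, rgb in enumerate(row):
            luma, co, cg = rgb_to_ycocg(*rgb)
            out_row.append((luma, co if stores_co(x, y) else cg))
        encoded.append(out_row)
    return encoded


def decode_naive(encoded, x, y):
    """Rebuild RGB at (x, y), borrowing the missing chroma from one horizontal
    neighbor, which on a checkerboard always stores the other component."""
    luma, chroma = encoded[y][x]
    nx = x + 1 if x + 1 < len(encoded[y]) else x - 1
    _, other = encoded[y][nx]
    co, cg = (chroma, other) if stores_co(x, y) else (other, chroma)
    return ycocg_to_rgb(luma, co, cg)


if __name__ == "__main__":
    red, green = (1.0, 0.0, 0.0), (0.0, 1.0, 0.0)
    row = encode([[red, red, green, green]])
    print([decode_naive(row, x, 0) for x in range(4)])  # fringes at the red/green edge
```

Inside a flat-colored region the reconstruction is exact, but at the red/green boundary the second pixel comes back as (0.25, 0.75, -0.75) instead of red, which is the fringing described next.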

Naively sampling a single neighboring pixel creates color fringing along chroma edges. The paper above uses a red flag against a blue sky to illustrate this.

These artifacts also occur, even more severely, when the sampled neighbor has no data. In our case that would be a pixel where no transparent objects were drawn, resulting in a one-pixel outline around the silhouette of the accumulated transparent objects in the scene. The paper above also supplies a straightforward solution to this problem: retrieve the missing chroma component through a luminance-weighted sampling of the cross-shaped (4-pixel) neighborhood.
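The sketch below mirrors, in Python for illustration, the weighting used by the reconstructChromaHDR function in the compositing pass: each neighbor is weighted by 1 / (1 + Δ⁴), where Δ is its luminance difference to the center, and neighbors with (near) zero luminance get no weight at all, so empty pixels outside the silhouette cannot drag the estimate toward zero. Like that listing, it falls back to zero chroma when no neighbor is usable. The Python names are hypothetical.

```python
def reconstruct_chroma(center, neighbors, epsilon=1e-5):
    """center and each neighbor are normalized (luma, chroma) pairs.
    Returns (center chroma, estimate of the missing chroma component)."""
    weights = []
    for luma, _ in neighbors:
        delta = luma - center[0]
        weight = 1.0 / (delta ** 4 + 1.0)  # similar luminance -> weight near 1
        if luma < epsilon:                 # empty (or black) neighbor: no weight
            weight = 0.0
        weights.append(weight)
    total = sum(weights)
    if total <= epsilon:
        return (0.0, 0.0)                  # same fallback as the GLSL listing
    estimate = sum(w * chroma for w, (_, chroma) in zip(weights, neighbors)) / total
    return (center[1], estimate)


if __name__ == "__main__":
    # A silhouette pixel: two neighbors are empty, two hold transparent data.
    center = (0.5, 0.2)
    neighbors = [(0.0, 0.0), (0.5, 0.4), (0.0, 0.0), (0.5, 0.4)]
    print(reconstruct_chroma(center, neighbors))
    print("unweighted average:", sum(c for _, c in neighbors) / len(neighbors))
```

With two empty and two populated neighbors, the weighted estimate is 0.4, whereas an unweighted average of all four would give 0.2.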

Finally, here are the relevant code snippets:

Accumulation Pass

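The accumulation pass writes to an RGBA floating point target with blending enabled, the blend function set to gl.blendFuncSeparate(gl.ONE, gl.ONE, gl.ZERO, gl.ONE_MINUS_SRC_ALPHA), and the default gl.FUNC_ADD blend equation. The target needs to be cleared to (0, 0, 0, 1) first: the color channels start at zero, and the alpha channel starts fully revealed so that each fragment can multiply in its (1 - alpha).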

vec3 diffuseSample = ... // Calculate diffuse lighting contribution.

vec3 specularSample = ... // Calculate specular lighting contribution. In our case this is the indirect specular reflections from environment probes.

vec3 emissionSample = ... // Calculate emission lighting contribution.

// Premultiply.

vec3 outputColor = (diffuseSample + specularSample + emissionSample) * alpha;

vec3 colorYCoCg = rgbToYcocg(outputColor);

// Interlace chroma.

vec2 colorYC = checkerboard() > 0.0 ? colorYCoCg.xy : colorYCoCg.xz;

float weight = orderIndependentTransparencyWeight(alpha, depth);

gl_FragColor.rg = colorYC * weight;

gl_FragColor.b = alpha * weight;

gl_FragColor.a = alpha;
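Accumulating Y, Co and Cg with additive blending, instead of R, G and B, is valid because the YCoCg transform is linear: a weighted sum taken in YCoCg and converted back equals the same weighted sum taken directly in RGB. The Python sketch below writes rgbToYcocg and ycocgToRgb as 3x3 matrices and checks this; it ignores the chroma interlacing, which is the only lossy step. The helper names and sample colors are illustrative.

```python
import math

# Rows of rgbToYcocg and ycocgToRgb from the supporting functions, as matrices.
RGB_TO_YCOCG = ((0.25, 0.5, 0.25),
                (0.5, 0.0, -0.5),
                (-0.25, 0.5, -0.25))
YCOCG_TO_RGB = ((1.0, 1.0, -1.0),
                (1.0, 0.0, 1.0),
                (1.0, -1.0, -1.0))


def apply(matrix, vector):
    """Matrix-vector product for 3x3 matrices."""
    return tuple(sum(matrix[i][k] * vector[k] for k in range(3)) for i in range(3))


def accumulate_in_ycocg(colors, weights):
    """Additively blend weighted YCoCg values, then normalize and convert back."""
    total = [0.0, 0.0, 0.0]
    for color, weight in zip(colors, weights):
        ycocg = apply(RGB_TO_YCOCG, color)
        total = [t + weight * c for t, c in zip(total, ycocg)]
    return apply(YCOCG_TO_RGB, [t / sum(weights) for t in total])


def accumulate_in_rgb(colors, weights):
    """Reference: the same weighted average computed directly in RGB."""
    return tuple(sum(w * c[i] for c, w in zip(colors, weights)) / sum(weights)
                 for i in range(3))


if __name__ == "__main__":
    colors = [(0.9, 0.2, 0.1), (0.1, 0.8, 0.3), (0.2, 0.3, 1.0)]
    weights = [3.0, 0.5, 1.25]
    via_ycocg = accumulate_in_ycocg(colors, weights)
    direct = accumulate_in_rgb(colors, weights)
    assert all(math.isclose(a, b, abs_tol=1e-12) for a, b in zip(via_ycocg, direct))
    print(via_ycocg)
```

The division by the summed weights plays the role of the division by the blue (weighted alpha) channel in the compositing pass.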

Compositing Pass

The compositing pass, in our case, writes to an RGBA floating point target (our HDR + alpha target), but it could be rolled into the tone-mapping pass if no other post-processes need to run. No blending is done in our case, as we are not drawing to the canvas yet. The pass is rendered via a screen-filling quad.

vec4 sampleCenter = texture2D(accumulationPassSampler, vUV);

// Sample 4-Neighborhood.

vec2 sampleNorthUV = vec2(vUV.x, accumulationPassInverseResolution.y + vUV.y);

vec2 sampleSouthUV = vec2(vUV.x, -accumulationPassInverseResolution.y + vUV.y);

vec2 sampleWestUV = vec2(-accumulationPassInverseResolution.x + vUV.x, vUV.y);

vec2 sampleEastUV = vec2( accumulationPassInverseResolution.x + vUV.x, vUV.y);

vec4 sampleNorth = texture2D(accumulationPassSampler, sampleNorthUV);

vec4 sampleSouth = texture2D(accumulationPassSampler, sampleSouthUV);

vec4 sampleWest = texture2D(accumulationPassSampler, sampleWestUV);

vec4 sampleEast = texture2D(accumulationPassSampler, sampleEastUV);

vec3 ycocg;

// Normalize the center sample.

ycocg.xy = sampleCenter.xy / clamp(sampleCenter.z, 1e-4, 5e4);

// Normalize the neighboring (luma,chroma) samples.

vec2 sampleNorthYC = sampleNorth.xy / clamp(sampleNorth.z, 1e-4, 5e4);

vec2 sampleSouthYC = sampleSouth.xy / clamp(sampleSouth.z, 1e-4, 5e4);

vec2 sampleWestYC = sampleWest.xy / clamp(sampleWest.z, 1e-4, 5e4);

vec2 sampleEastYC = sampleEast.xy / clamp(sampleEast.z, 1e-4, 5e4);

// Reconstruct YCoCg color through luminance similarity weighting.

ycocg.yz = reconstructChromaHDR(ycocg.xy, sampleNorthYC, sampleSouthYC, sampleWestYC, sampleEastYC);

// Swizzle chroma dependant on which component (Co or Cg) we reconstructed.

ycocg.yz = checkerboard() > 0.0 ? ycocg.yz : ycocg.zy;

// Weighted average of the fragments' unpremultiplied colors; coverage (1 - revealage) is applied by the mix() below.

vec3 transparencyColor = ycocgToRgb(ycocg);

float backgroundReveal = sampleCenter.a;

vec4 background = texture2D(backgroundSampler, vUV);

background.rgb *= background.a;

// Composite transparency against background.

vec3 compositeColor = mix(transparencyColor.rgb, background.rgb, backgroundReveal);

float compositeAlpha = 1.0 - ((1.0 - background.a) * backgroundReveal);

// Outputs premultiplied alpha

gl_FragColor = vec4(compositeColor, compositeAlpha);
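As a sanity check of the resolve math, here is the per-pixel compositing logic in Python, in plain RGB (the YCoCg reconstruction is skipped). With a single fragment, the weight cancels in the normalization and the result reduces to the standard “over” operator, which is an easy property to test. The names are hypothetical; the 1e-4 and 5e4 clamp bounds are the ones used in the listing above.

```python
def clamp(x, lo, hi):
    return max(lo, min(hi, x))


def mix(a, b, t):
    """GLSL mix(): a * (1 - t) + b * t."""
    return a * (1.0 - t) + b * t


def resolve(weighted_color_sum, weighted_alpha_sum, revealage, background):
    """Per-pixel compositing math, in RGB for readability.

    weighted_color_sum: per-channel sum of premultiplied color * weight
    weighted_alpha_sum: sum of alpha * weight
    revealage:          product of (1 - alpha), as left in the alpha channel
    background:         straight (non-premultiplied) RGBA
    Returns premultiplied RGBA.
    """
    denom = clamp(weighted_alpha_sum, 1e-4, 5e4)
    transparency = [c / denom for c in weighted_color_sum]
    background_rgb = [c * background[3] for c in background[:3]]
    rgb = [mix(t, b, revealage) for t, b in zip(transparency, background_rgb)]
    alpha = 1.0 - (1.0 - background[3]) * revealage
    return tuple(rgb) + (alpha,)


if __name__ == "__main__":
    # One fragment: straight color c, coverage a, arbitrary weight w.
    c, a, w = (0.2, 0.6, 1.0), 0.4, 12.5
    bg = (0.9, 0.1, 0.3, 1.0)
    out = resolve([ci * a * w for ci in c], a * w, 1.0 - a, bg)
    over = [a * ci + (1.0 - a) * bi for ci, bi in zip(c, bg[:3])]
    print(out, over)  # the weight cancels, leaving the standard "over" result
```

A pixel with no transparent coverage (zero sums, revealage of 1) passes the premultiplied background straight through.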

Supporting Functions

vec3 rgbToYcocg(const in vec3 rgbColor) {

vec3 ycocg;

float r0 = rgbColor.r * 0.25;

float r1 = rgbColor.r * 0.5;

float g0 = rgbColor.g * 0.5;

float b0 = rgbColor.b * 0.25;

float b1 = rgbColor.b * 0.5;

ycocg.x = r0 + g0 + b0;

ycocg.y = r1 - b1;

ycocg.z = -r0 + g0 - b0;

return ycocg;

}

vec3 ycocgToRgb(const in vec3 ycocgColor) {

vec3 rgb_color;

rgb_color.r = ycocgColor.x + ycocgColor.y - ycocgColor.z;

rgb_color.g = ycocgColor.x + ycocgColor.z;

rgb_color.b = ycocgColor.x - ycocgColor.y - ycocgColor.z;

return rgb_color;

}

float checkerboard() {

float swatch = -1.0;

if (mod(gl_FragCoord.x, 2.0) != mod(gl_FragCoord.y, 2.0)) {

swatch = 1.0;

}

return swatch;

}

// Journal of Computer Graphics Techniques

// The Compact YCoCg Frame Buffer

// http://jcgt.org/published/0001/01/02/

// Listing #2

// Modified to support HDR luminance values, as opposed to the source’s gamma corrected LDR images.

vec2 reconstructChromaHDR(const in vec2 center, const in vec2 a1, const in vec2 a2, const in vec2 a3, const in vec2 a4) {

vec4 luminance = vec4(a1.x, a2.x, a3.x, a4.x);

vec4 chroma = vec4(a1.y, a2.y, a3.y, a4.y);

vec4 lumaDelta = luminance - vec4(center.x);

vec4 lumaDelta2 = lumaDelta * lumaDelta;

vec4 lumaDelta4AddOne = lumaDelta2 * lumaDelta2 + 1.0;

vec4 weight = 1.0 / lumaDelta4AddOne;

// Handle the case where sample is black.

weight = step(1e-5, luminance) * weight;

float totalWeight = weight.x + weight.y + weight.z + weight.w;

// Handle the case where all weights are 0.

if (totalWeight <= 1e-5) {

return vec2(0.0);

}

return vec2(center.y, dot(chroma, weight) / totalWeight);

}

#if OIT_WEIGHT_FUNCTION == OIT_WEIGHT_FUNCTION_CUSTOM

float orderIndependentTransparencyWeight(

const in float alpha,

const in float depthLinear,

const in float depthWeightScale,

const in float depthWeightExponent,

const in float depthWeightMin,

const in float depthWeightMax

) {

float depthWeight = depthWeightScale / (1e-5 + pow(depthLinear, depthWeightExponent));

depthWeight = clamp(depthWeight, depthWeightMin, depthWeightMax);

return alpha * depthWeight;

}

#elif OIT_WEIGHT_FUNCTION == OIT_WEIGHT_FUNCTION_A

// Journal of Computer Graphics Techniques

// Weighted Blended Order-Independent Transparency

// http://jcgt.org/published/0002/02/09/

// Weighting function #7

float orderIndependentTransparencyWeight(const in float alpha, const in float depthLinear) {

float a = depthLinear * 0.2;

float a2 = a * a;

float depthWeight = 10.0 / (1e-5 + a2 + pow(depthLinear/200.0, 6.0));

depthWeight = clamp(depthWeight, 0.01, 3000.0);

return alpha * depthWeight;

}

#elif OIT_WEIGHT_FUNCTION == OIT_WEIGHT_FUNCTION_B

// Journal of Computer Graphics Techniques

// Weighted Blended Order-Independent Transparency

// http://jcgt.org/published/0002/02/09/

// Weighting function #8

float orderIndependentTransparencyWeight(const in float alpha, const in float depthLinear) {

float depthWeight = 10.0 / (1e-5 + pow(depthLinear * 0.1, 3.0) + pow(depthLinear / 200.0, 6.0));

depthWeight = clamp(depthWeight, 0.01, 3000.0);

return alpha * depthWeight;

}

#elif OIT_WEIGHT_FUNCTION == OIT_WEIGHT_FUNCTION_C

// Journal of Computer Graphics Techniques

// Weighted Blended Order-Independent Transparency

// http://jcgt.org/published/0002/02/09/

// Weighting function #9

float orderIndependentTransparencyWeight(const in float alpha, const in float depthLinear) {

float depthWeight = 0.03 / (1e-5 + pow(depthLinear / 200.0, 4.0));

depthWeight = clamp(depthWeight, 0.01, 3000.0);

return alpha * depthWeight;

}

#else // OIT_WEIGHT_FUNCTION == OIT_WEIGHT_FUNCTION_D

// Journal of Computer Graphics Techniques

// Weighted Blended Order-Independent Transparency

// http://jcgt.org/published/0002/02/09/

// Weighting function #10

float orderIndependentTransparencyWeight(const in float alpha, const in float depthFragCoord) {

float depthWeight = 3e3 * pow(1.0 - depthFragCoord, 3.0);

depthWeight = clamp(depthWeight, 0.01, 3000.0);

return alpha * depthWeight;

}

#endif
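To get a feel for how the depth-based weights behave, the sketch below ports weighting functions #7, #8 and #9 from the listing to Python and evaluates them over a few view-space depths. It assumes depthLinear is a positive, linear view-space distance; the sample depths are arbitrary.

```python
def clamp(x, lo, hi):
    return max(lo, min(hi, x))


def weight_eq7(alpha, z):
    """Weighting function #7; z is positive linear view-space depth."""
    return alpha * clamp(10.0 / (1e-5 + (z * 0.2) ** 2 + (z / 200.0) ** 6), 0.01, 3000.0)


def weight_eq8(alpha, z):
    """Weighting function #8."""
    return alpha * clamp(10.0 / (1e-5 + (z * 0.1) ** 3 + (z / 200.0) ** 6), 0.01, 3000.0)


def weight_eq9(alpha, z):
    """Weighting function #9."""
    return alpha * clamp(0.03 / (1e-5 + (z / 200.0) ** 4), 0.01, 3000.0)


if __name__ == "__main__":
    depths = [0.1, 1.0, 10.0, 50.0, 200.0, 1000.0]  # arbitrary sample depths
    for name, fn in (("#7", weight_eq7), ("#8", weight_eq8), ("#9", weight_eq9)):
        print(name, [round(fn(1.0, z), 3) for z in depths])
```

All three weights are non-increasing with depth and stay within the [0.01, 3000] clamp (scaled by alpha), so nearer fragments dominate the normalized average while the clamp helps keep the accumulated sums in a range that 16-bit float targets can hold.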

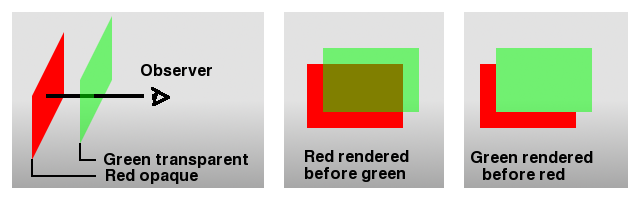

Addendum: the problems with order-dependent transparency

Transparency is often handled by sorting objects on depth and rendering them back to front. This can be inconvenient, but it is doable. For self-contained, largely convex objects (think props), it can work reasonably well. Object-to-object partial occlusion will be correct as long as interpenetration does not occur along the view ray.

Unfortunately, whenever interpenetration occurs, sorting at the object level under-samples the draw order and produces incorrect results. This can be object-to-object interpenetration, or triangle-to-triangle interpenetration within a single object.
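A tiny Python sketch shows why the draw order matters for sorted transparency: the back-to-front “over” operator is not commutative. When two surfaces interpenetrate, the correct order flips partway across the overlap, so any single object-level order is wrong for some of the pixels. The colors and helper names are illustrative.

```python
def over(dst, fragment):
    """Composite a straight-alpha fragment (color, alpha) over an RGB destination."""
    color, alpha = fragment
    return tuple(alpha * c + (1.0 - alpha) * d for c, d in zip(color, dst))


def draw_back_to_front(background, fragments):
    """Apply `over` in the given order (the first fragment is assumed farthest)."""
    result = background
    for fragment in fragments:
        result = over(result, fragment)
    return result


if __name__ == "__main__":
    red = ((1.0, 0.0, 0.0), 0.5)
    blue = ((0.0, 0.0, 1.0), 0.5)
    black = (0.0, 0.0, 0.0)
    print(draw_back_to_front(black, [red, blue]))  # blue in front
    print(draw_back_to_front(black, [blue, red]))  # red in front
```

Swapping the two half-transparent layers changes the pixel from (0.25, 0.0, 0.5) to (0.5, 0.0, 0.25).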

Scenes can be designed to mitigate interpenetration; however, this requires artist training and artistic license. At Floored, we represent real spaces and cannot re-architect our way around these problems. Furthermore, our scenes are editable by “normal” end users, so this approach is not an option.

Interpenetration can be particularly problematic in particle rendering. If the simulation is CPU-side, you can sort per particle, but it won’t be cheap. Worse, it’s ideal to represent particle systems with a few large billboards in order to mitigate overdraw. Chances are, the billboards representing your particles interpenetrate. Errors introduced by under-sampled sorting will emphasize the flat nature of the system’s underlying geometry. If the simulation is GPU-side, you’re into much more involved approaches, such as stochastic rendering.

At Floored, we don’t generally visualize gas grenades and smoke columns within swanky NY developments. We might get on some list if we did. We do, however, deal in lots of glass. Bright forms that suggest a larger space than their geometric constraints dictate are generally desirable. This makes Apple Store-like elements of involved, interlocking transparency more common than you might expect. Thanks, Steve.

We were especially excited to see McGuire’s paper because order-independent transparency is a good fit for most of our use cases: it gives us correct results in the simple case of a single layer of transparent geometry, and degrades gracefully in more involved cases.