Halloween Party

Floored’s Halloween party was a success this year. It had all the old Halloween standbys: a pumpkin carving, a costume contest, and plenty of cider and drinks.

At Floored we read a lot of different stuff from all over the web. Each month we put together a list of the coolest stuff we’ve seen recently. Whether they’re new or just new to us, some will probably be new to you. Enjoy!

Dynamic lighting becomes a puzzle in this forthcoming iPad app.

A neat filtering tool.

Created for a SIGGRAPH presentation, this page presents a 3D story about a lonely “space puppy” in a psychedelic, underwater world, which is a thin allegory for the state of 3D on the web.

More amazing things are being done with Oculus.

Nifty 3D gallery for artist Olafur Eliasson, whose work is worthy of the “cool thing” title all by itself.

Real-time face tracking and projection mapping.

A cool clock animation using Bézier curves.

Good short video from John Carmack on the new Samsung mobile VR display.

Great 3D WebGL experience from Nike.

This monocular SLAM algorithm is really cool.

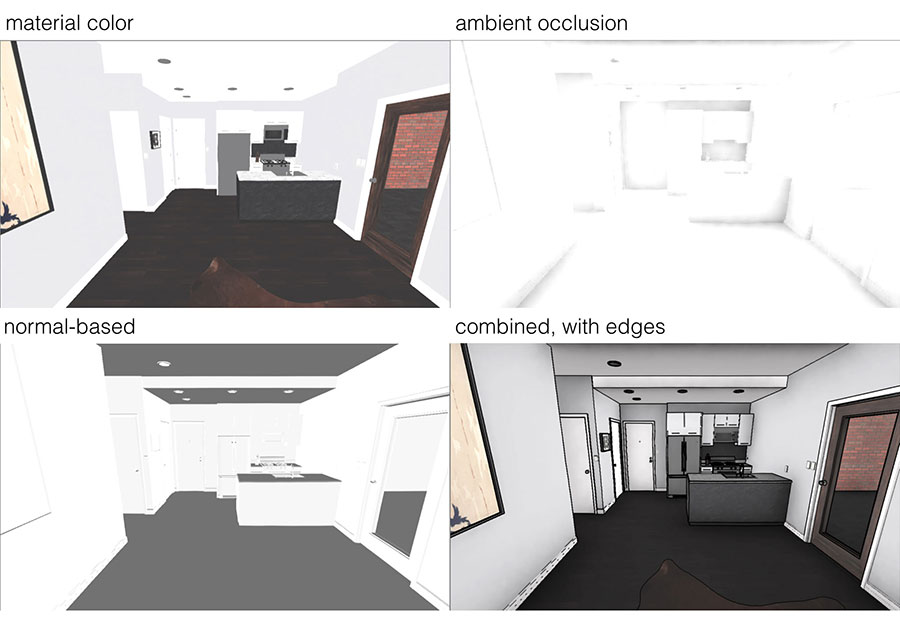

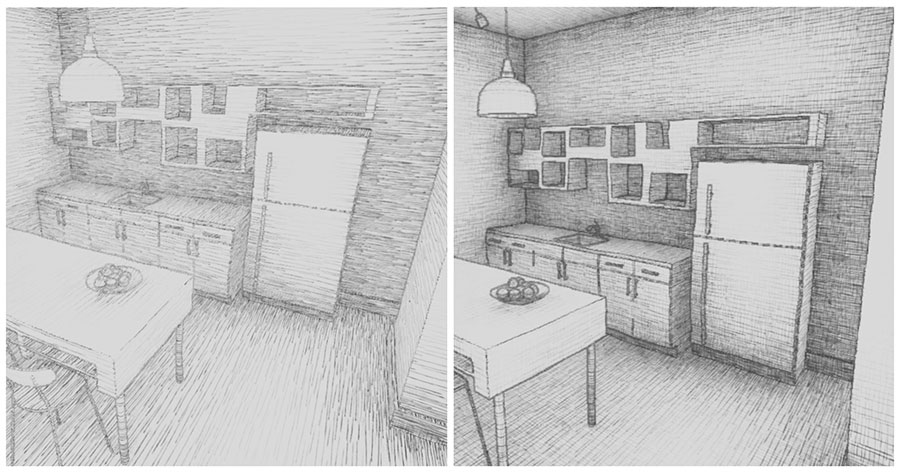

At Floored, our main rendering pipeline aims for realism and is great for many different applications. However, real-time photorealistic rendering in the browser is very performance-intensive, and sometimes we just need to understand layout and space without lights and materials. Because we designed our rendering engine to be data-driven, we were able to easily add a new “sketchy” look that is great for layout planning and produces a pleasing, artistic aesthetic.

Our “sketch” pipeline differs from our main rendering pipeline in three key ways:

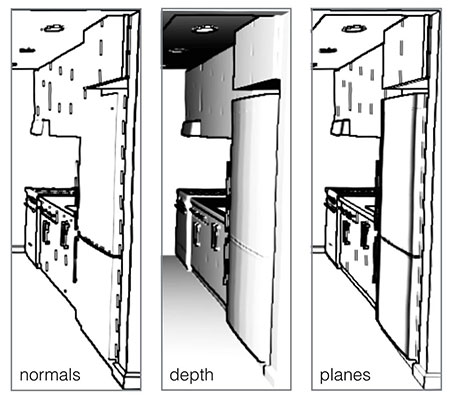

Outlines are a component of many non-photorealistic rendering styles. We tested a few different ways to achieve them.

We found that convolving a Sobel filter with the normals produced confusing results. It fails in cases such as two parallel walls at different depths, which share the same normal. Aliasing also breaks up the detected lines, which results in shimmering, broken outlines.

Using depth alone is also insufficient, since it can produce extraneous edge shading on flat but foreshortened surfaces.

A plane-distance metric produced more usable results than computing the difference in normals or the difference in depth. For two points A and B, it measures the distance from A’s position in space (reconstructed from the frame’s depth) to the plane through B with B’s normal, or vice versa. The resulting edge detection is fairly similar to change in depth, but it also responds to changes in normals and excludes the extraneous shading on flat surfaces.

```glsl
// Larger of the two point-to-plane distances between A and B.
float planeDistance(const in vec3 positionA, const in vec3 normalA,
                    const in vec3 positionB, const in vec3 normalB) {
  vec3 positionDelta = positionB - positionA;
  // abs(dot(delta, normalA)): distance from B to the plane through A; likewise for normalB.
  float planeDistanceDelta = max(abs(dot(positionDelta, normalA)),
                                 abs(dot(positionDelta, normalB)));
  return planeDistanceDelta;
}

void main() {
  float depthCenter = decodeGBufferDepth(camera_uGBuffer, vUV, camera_uClipFar);
  // ...
  // get positions and normals at cross neighborhood
  // ...

  // Compare opposite neighbors horizontally (west/east) and vertically (north/south).
  vec2 planeDist = vec2(
    planeDistance(posWest, geomWest.normal, posEast, geomEast.normal),
    planeDistance(posNorth, geomNorth.normal, posSouth, geomSouth.normal)
  );

  float edge = 240.0 * length(planeDist);
  // The center depth is the upper edge, so farther pixels need a larger
  // plane distance to register as an edge.
  edge = smoothstep(0.0, depthCenter, edge);
  gl_FragColor = vec4(vec3(1.0 - edge), 1.0);
}
```
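The following is a CPU-side Python port of `planeDistance()` that checks the two failure cases described above: a foreshortened floor and two parallel walls at different depths. The helper names `dot`, `sub`, and `plane_distance` and the sample coordinates are ours, chosen for illustration. They are not taken from the shader.

```python
def dot(a, b):
    """Dot product of two 3-vectors (like GLSL dot() on vec3)."""
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def sub(a, b):
    """Component-wise a - b for 3-vectors."""
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def plane_distance(pos_a, normal_a, pos_b, normal_b):
    """CPU port of the shader's planeDistance().

    abs(dot(B - A, normal_a)) is the distance from B to the plane through A
    with normal normal_a; the normal_b term measures A against B's plane.
    The larger of the two is returned.
    """
    delta = sub(pos_b, pos_a)
    return max(abs(dot(delta, normal_a)), abs(dot(delta, normal_b)))


# Foreshortened floor: depth differs by 9 units, yet both points lie on y = 0.
floor_dist = plane_distance((0.0, 0.0, -1.0), (0.0, 1.0, 0.0),
                            (0.0, 0.0, -10.0), (0.0, 1.0, 0.0))

# Parallel walls one unit apart: identical normals, but a real edge.
wall_dist = plane_distance((0.0, 0.0, -5.0), (0.0, 0.0, 1.0),
                           (0.1, 0.0, -6.0), (0.0, 0.0, 1.0))
```

A depth-difference test would flag the floor, and a normal-difference test would miss the walls. The plane distance is 0.0 for the floor and 1.0 for the walls.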

Line-finding was not necessary in our case, as long as we chose an appropriate weighting to bring out the edges. We alpha-composite the Sobel-filtered result into the output buffer. A smoothstep operation on the edge darkness value improves clarity by pushing values toward black or white and reducing gray.
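To show how the smoothstep reduces gray, here is a small Python sketch of GLSL's `smoothstep()` and of the edge term from the shader above. The function names are ours. `depth_center` stands in for the decoded G-buffer depth, and we make no assumption about its units.

```python
import math


def smoothstep(edge0, edge1, x):
    """GLSL smoothstep(): 0 below edge0, 1 above edge1, Hermite curve between."""
    t = min(max((x - edge0) / (edge1 - edge0), 0.0), 1.0)
    return t * t * (3.0 - 2.0 * t)


def edge_darkness(plane_dist_x, plane_dist_y, depth_center, weight=240.0):
    """CPU version of the shader's edge term.

    weight is the shader's 240.0 constant; depth_center sets the upper edge
    of the smoothstep, so the same plane distance reads lighter farther away.
    """
    edge = weight * math.hypot(plane_dist_x, plane_dist_y)
    return smoothstep(0.0, depth_center, edge)


# Mid-range inputs are pushed apart: 0.25 -> 0.15625, 0.75 -> 0.84375.
low = smoothstep(0.0, 1.0, 0.25)
high = smoothstep(0.0, 1.0, 0.75)
```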

Our pipeline has several options for adding 3D shading and depth:

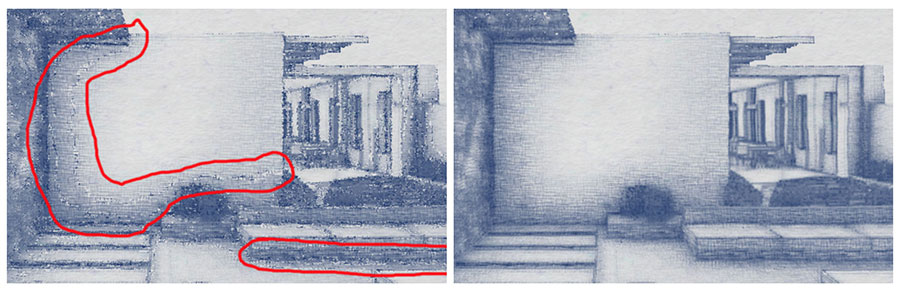

We implemented a cross-hatching shader based on Microsoft Research’s Real-Time Hatching paper. The basic idea is to apply a different hatch texture to a fragment depending on its tonal value, with denser hatching for darker tones. The paper uses six different hatch and crosshatch textures.

Initially, we assumed six “steps” of value, one for each of the six textures. At each step, we blended two textures so that we would get a nice gradient across objects.

However, we found that this led to precision issues along the borders between sets of textures, which produced very nasty artifacts. Instead, we decided to always blend all six textures (even when the weight of a particular texture is 0.0). This simple change vastly improved our results.

```glsl
// Seven tones: hatchTexture0 (lowest shading) through hatchTexture5, plus plain white.
float shade(const in float shading, const in vec2 uv) {
  float shadingFactor;
  float stepSize = 1.0 / 6.0;
  float alpha = 0.0;
  float scaleHatch0 = 0.0;
  float scaleHatch1 = 0.0;
  float scaleHatch2 = 0.0;
  float scaleHatch3 = 0.0;
  float scaleHatch4 = 0.0;
  float scaleHatch5 = 0.0;
  float scaleHatch6 = 0.0;

  // Find the two tones that bracket `shading` and blend linearly between them.
  if (shading <= stepSize) {
    alpha = 6.0 * shading;
    scaleHatch0 = 1.0 - alpha;
    scaleHatch1 = alpha;
  }
  else if (shading > stepSize && shading <= 2.0 * stepSize) {
    alpha = 6.0 * (shading - stepSize);
    scaleHatch1 = 1.0 - alpha;
    scaleHatch2 = alpha;
  }
  else if (shading > 2.0 * stepSize && shading <= 3.0 * stepSize) {
    // ...
  }
  else if (shading > 3.0 * stepSize && shading <= 4.0 * stepSize) {
    // ...
  }
  else if (shading > 4.0 * stepSize && shading <= 5.0 * stepSize) {
    // ...
  }
  else if (shading > 5.0 * stepSize) {
    // ...
  }

  // Always sample all six textures, even when a weight is 0.0.
  shadingFactor = scaleHatch0 * texture2D(hatchTexture0, uv).r +
                  scaleHatch1 * texture2D(hatchTexture1, uv).r +
                  scaleHatch2 * texture2D(hatchTexture2, uv).r +
                  scaleHatch3 * texture2D(hatchTexture3, uv).r +
                  scaleHatch4 * texture2D(hatchTexture4, uv).r +
                  scaleHatch5 * texture2D(hatchTexture5, uv).r +
                  scaleHatch6;  // plain white, so no texture sample is needed
  return shadingFactor;
}
```
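The middle branches of `shade()` are elided above. The following Python sketch is therefore a hypothetical generalization that assumes they follow the same pattern as the first two: each shading value falls between two adjacent tones, and those two tones are blended linearly. Every tone gets a weight, and most weights are 0.0. The names `hatch_weights` and `shade` and the `hatch_samples` list (standing in for the six `texture2D()` reads) are ours.

```python
import math


def hatch_weights(shading, levels=6):
    """Blend weights for levels + 1 tones (six hatch textures plus white).

    Hypothetical generalization of the branches in shade(): the two tones
    bracketing `shading` are blended linearly; all others get 0.0.
    """
    s = min(max(shading, 0.0), 1.0)
    scaled = s * levels                        # 6.0 * shading in the shader
    i = min(int(math.floor(scaled)), levels - 1)
    alpha = scaled - i
    weights = [0.0] * (levels + 1)
    weights[i] = 1.0 - alpha
    weights[i + 1] = alpha
    return weights


def shade(shading, hatch_samples):
    """Weighted sum of every hatch sample plus plain white (1.0)."""
    weights = hatch_weights(shading, len(hatch_samples))
    return sum(w * h for w, h in zip(weights, list(hatch_samples) + [1.0]))
```

Because the sum always touches every sample, the shader's equivalent performs the same six texture reads for every fragment, whichever two weights are nonzero.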

At this point we were happy with the visual quality of our hatching, but not with its performance: it needed 30 texture samples per pixel plus branchy logic.

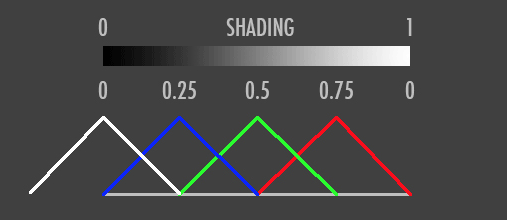

We decided to go down from six hatch textures to four, which let us pack all of the hatches into a single RGBA lookup texture: one hatch per channel, and one texture2D() sample per shade() call. Then we replaced all the branches in shade() with overlapping tent functions.

Each colored function represents the weight of one hatch texture, packed into the corresponding color channel. shade() then reduces to the dot product of the hatch lookup texture sample and the shadeWeights() result.

To compute the weights, we construct the following function:

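```glsl
// Four overlapping tent functions, one per RGBA channel of the hatch lookup texture.
vec4 shadeWeights(const in float shading) {
  vec4 shadingFactor = vec4(shading);
  // Each channel is 0.0 at its leftRoot and rightRoot and peaks at 1.0 midway.
  const vec4 leftRoot = vec4(-0.25, 0.0, 0.25, 0.5);
  const vec4 rightRoot = vec4(0.25, 0.5, 0.75, 1.0);
  // clamp(x, minVal, maxVal) is undefined when minVal > maxVal, and
  // rightRoot - shading goes negative past a channel's right root, so the
  // tent is written as max(0, min(rising edge, falling edge)) instead.
  return 4.0 * max(vec4(0.0), min(shadingFactor - leftRoot, rightRoot - shadingFactor));
}
```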
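To check the tent functions and the packed dot product numerically, here is a Python sketch. The roots are copied from `shadeWeights()`. The tent is written as `max(0, min(x - left, right - x))` because GLSL `clamp()` is undefined when its lower bound exceeds its upper bound, which happens once `shading` passes a channel's right root. The names `shade_weights` and `shade` are ours, and `rgba_sample` stands in for the single `texture2D()` lookup.

```python
LEFT_ROOT = (-0.25, 0.0, 0.25, 0.5)
RIGHT_ROOT = (0.25, 0.5, 0.75, 1.0)


def shade_weights(shading):
    """Tent-function weights for four hatches packed into R, G, B, A.

    Each channel rises linearly from its left root, peaks at 1.0 halfway,
    and falls back to 0.0 at its right root; outside that range it is 0.0.
    """
    return tuple(
        4.0 * max(0.0, min(shading - lo, hi - shading))
        for lo, hi in zip(LEFT_ROOT, RIGHT_ROOT)
    )


def shade(rgba_sample, shading):
    """One packed RGBA hatch sample dotted with the tent weights."""
    return sum(h * w for h, w in zip(rgba_sample, shade_weights(shading)))
```

Between 0.0 and 0.75, the two active tents always sum to 1.0, which gives the same smooth gradient as the branchy version, computed from a single texture sample.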

To give the scene a more “sketchy” feel, we perturbed the UVs so lines would appear slightly wavy and hand-drawn. The waviness is greatest at the center of the screen and fades out toward the edges to avoid artifacts at the screen borders. We perturbed the UVs based on a cloud-like texture (warpVectorFieldTexture in the code below).

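```glsl
// Distance (in UV units) to the nearest screen border: 0.0 at the edges, 0.5 at the center.
float uvDist = 1.0;
uvDist = min(vUV.s, uvDist);
uvDist = min(vUV.t, uvDist);
uvDist = min(1.0 - vUV.s, uvDist);
uvDist = min(1.0 - vUV.t, uvDist);

// Fade both warp amplitudes toward zero at the screen borders.
float smallAmplitude = 0.005;
float largeAmplitude = 0.01;
largeAmplitude *= uvDist;
smallAmplitude *= uvDist;

// Two lookups of the warp texture: a fine, high-frequency wobble (vUV * 2.0)
// and a broad, low-frequency one (vUV * 0.3).
vec2 perturbedUV = texture2D(warpVectorFieldTexture, vUV * 2.0).xy * vec2(smallAmplitude);
perturbedUV += texture2D(warpVectorFieldTexture, vUV * 0.3).xy * vec2(largeAmplitude);
perturbedUV += vUV;
```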
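The following Python sketch isolates the edge fade in the UV perturbation above. The names `edge_fade` and `perturb_uv` are ours. The two `warp_*` tuples are hypothetical stand-ins for the two `warpVectorFieldTexture` lookups, which the caller supplies directly instead of sampling a texture.

```python
def edge_fade(u, v):
    """Distance from (u, v) to the nearest screen border, like uvDist above.

    Returns 0.0 on the border and 0.5 at the center of the screen.
    """
    return min(1.0, u, v, 1.0 - u, 1.0 - v)


def perturb_uv(u, v, warp_small, warp_large,
               small_amplitude=0.005, large_amplitude=0.01):
    """Offset (u, v) by two warp samples, scaled down near the screen edges.

    warp_small and warp_large stand in for the lookups at vUV * 2.0 and
    vUV * 0.3; each is an (x, y) tuple provided by the caller.
    """
    fade = edge_fade(u, v)
    du = (warp_small[0] * small_amplitude + warp_large[0] * large_amplitude) * fade
    dv = (warp_small[1] * small_amplitude + warp_large[1] * large_amplitude) * fade
    return (u + du, v + dv)
```

On the border the fade is 0.0, so the UVs pass through unchanged. This is what keeps the wavy lines from tearing at the screen edges.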

The hatching shader is laid over whatever shading is chosen. We found that using the shading values from the ambient occlusion led to only the horizontal hatching textures being used, since there were no very dark values. We wanted the extra definition that crosshatching can give, so we faked it by flipping the texture across its diagonal (swapping u and v), scaling it, and applying it again.

This is the calculation with our original shade() function:

```glsl
float hatching = shade(shading, vUV * 1.5) * shade(shading, vUV.yx * 3.0);
```

This simple change gives us a greater value range and puts more emphasis on the edges.
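Here is an illustrative Python sketch of the swapped-UV trick. It uses a hypothetical procedural `stripes()` function (horizontal ink lines) as a stand-in for a hatch lookup, so the effect can be checked without textures. Swapping the coordinates turns horizontal lines into vertical ones. Multiplying the two layers keeps the ink from both, so a point is white only when it falls on neither set of lines.

```python
def stripes(u, v, frequency=10.0, line_width=0.2):
    """Hypothetical stand-in for a hatch texture: horizontal ink lines.

    Depends only on v; returns 0.0 (ink) on a line and 1.0 (paper) elsewhere.
    """
    phase = (v * frequency) % 1.0
    return 0.0 if phase < line_width else 1.0


def cross_hatch(u, v):
    """Mirror of shade(shading, vUV * 1.5) * shade(shading, vUV.yx * 3.0).

    The second call swaps u and v, so its lines run vertically.
    """
    return stripes(u * 1.5, v * 1.5) * stripes(v * 3.0, u * 3.0)
```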

Check out the full fragment shader.

We’re very happy with the end result, especially as the first example of the flexibility of our rendering engine to support different styles. In the future, we hope to expand the use cases for “sketch mode” (Oculus?) and add more fun, stylistic options.

At Floored we read a lot of different stuff from all over the web. Whenever someone comes across something that seems particularly useful, interesting, or just plain cool, we all get an email about it. Most of these things are new, though some we may only have discovered recently; either way, they’re pretty interesting. Enjoy!

Oculus Rift + GoPro = virtual surgery video for students and residents from surgeon’s point of view.

Usually infrared tools cost thousands of dollars, but this is only $200!

On August 11 hell froze over.

Hyperlapse from Microsoft Research: smooth first-person time-lapses (video, technical overview).

Nifty WebGL art gallery from fashion label Kenzo.

A paper from CMU describes a method for manipulating objects in photographs by completing their geometry from available 3D models.

Oculus Rift simulator.

We don’t always wake up early, but when we do it’s for BEACH DAY!!!!!

Last week, we fortified ourselves with monster-sized bagels and bused it all the way down to Long Branch, New Jersey to do our “Office Ditch Day” as a team.

We’ve grown a lot in the last few months, so we needed not one but TWO buses fully equipped with DVD players and air conditioning. Flooredians (Floored employees), plus family and friends, had the option of watching Fast and Furious 6 or The Lego Movie.

Thanks to one Jason Gelman, our Head of Business Operations, we landed the super sweet venue Avenue Beach Club. By super sweet we mean unlimited drinks and eats in addition to a rooftop pool over which we had complete control.

Proof:

What would a Floored event be without swag?